Anyone who has used a smartphone or other modern mobile device, and has enjoyed the millions of apps at our fingertips, has undoubtedly also had an app ruined by a poor user interface (UI). Product and user experience (UX) designers often go through several rounds of testing, interviewing, and analysis, with multiple iterations of the product, feature, or design, before they release something that is deemed user-friendly. For that reason, designers and developers look for answers to a number of questions that can guide them through the design process in today’s ever-changing technological landscape. We are often asked whether iMotions can help with the most sought-after questions in phone and app testing, such as:

- How do people interact with various kinds of content, particularly now that mindless scrolling is how we get recommendations, connect with people, and inform ourselves about world events?

- How do apps influence how we participate in society, and engage with social and political events and changes?

- How are websites viewed on a small phone versus a computer screen?

- Do people read the text all the way through, or is there an optimal amount of text and pictures to retain people’s attention?

Biosensors are a powerful way to study attention and emotional reactions, and to support website design and all kinds of UX testing.

To help answer these questions, Smart Eye and iMotions developed a mobile stand that lets you use your Smart Eye eye trackers for phone and app testing. This means you get all the benefits of your screen-based eye tracker on a phone.

The reason we created a mobile stand

Before we proceed with talking about how to test on your mobile phone, it’s worth taking a minute to understand why the development of what looks like plastic on metal is actually a big undertaking. A regular screen-based eye-tracker assumes the eye-tracker is below the screen and the participant is at a certain distance from said screen.

With this knowledge, the tracker can compute the angle the eyes of the participants make when looking at different parts of the screen. Even though this is oversimplifying the complex math an eye-tracker carries out, it is enough to appreciate that using this same piece of hardware above a phone instead of a computer requires some cool behind-the-scenes developments.
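To make the geometry a little more concrete, here is a minimal sketch of the kind of angle calculation involved. It is deliberately simplified and is not the tracker's actual algorithm: the function name and all distances are hypothetical, and it assumes the participant sits straight in front of the screen center.

```python
import math


def gaze_angle_deg(offset_cm: float, distance_cm: float) -> float:
    """Angle between the straight-ahead line of sight and a point on the
    screen that lies offset_cm away from the screen center."""
    return math.degrees(math.atan2(offset_cm, distance_cm))


# Hypothetical numbers, chosen only for illustration.
# Looking at the edge of a wide monitor from a typical desk distance:
monitor_edge = gaze_angle_deg(offset_cm=26.0, distance_cm=65.0)
# Looking at the edge of a phone screen held much closer:
phone_edge = gaze_angle_deg(offset_cm=3.5, distance_cm=35.0)

print(f"monitor edge: {monitor_edge:.1f} degrees")
print(f"phone edge:   {phone_edge:.1f} degrees")
```

Under these assumed numbers, the eyes rotate through a much smaller angle to cover a phone screen than a monitor, which is one reason the same tracker needs a different setup for each.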

First, imagine using a phone and imagine an eye tracker under the phone. Boom, done! No need for a mobile stand, right? This might make sense in your imagination, but there is one critical difference between eye tracking on a phone and on a desktop screen: you’re using your hands. Participants will use their hands to swipe and interact with the phone, and in practice, you’ll find that if the eye tracker is placed beneath the phone, the hands will obstruct it, resulting in lost data. The way we use a mobile device requires a special kind of setup if we want to get good eye tracking data.

Second, the dimensions of a phone are very different from those of a computer screen. Not only is the screen smaller, but we also hold a phone at a different angle than, say, a laptop. The eye tracker settings take this angle into account when calculating gaze position, so being able to control the tracker’s position, angle, and height relative to the screen is essential for accurate gaze calculations when moving the tracker from a monitor to a phone and vice versa.

The use of a mobile stand, therefore, strikes the perfect balance between controlled experimentation and ecological validity – you can test mobile content in the environment for which it was intended, but without compromising data quality. The fact that you can use the same Smart Eye tracker for both screen and phone also makes things much easier.

UX research with the mobile stand – How to test on your phone

So how can we use the mobile stand with iMotions in UX research? We ran a study asking participants to do two tasks: (1) read one of our blog posts on facial expression analysis, and (2) scroll through the iMotions Instagram account. We chose these tasks to highlight some of the key behaviors that our clients like to study, such as social media scrolling, website navigation, and viewing ads in embedded content, to name a few.

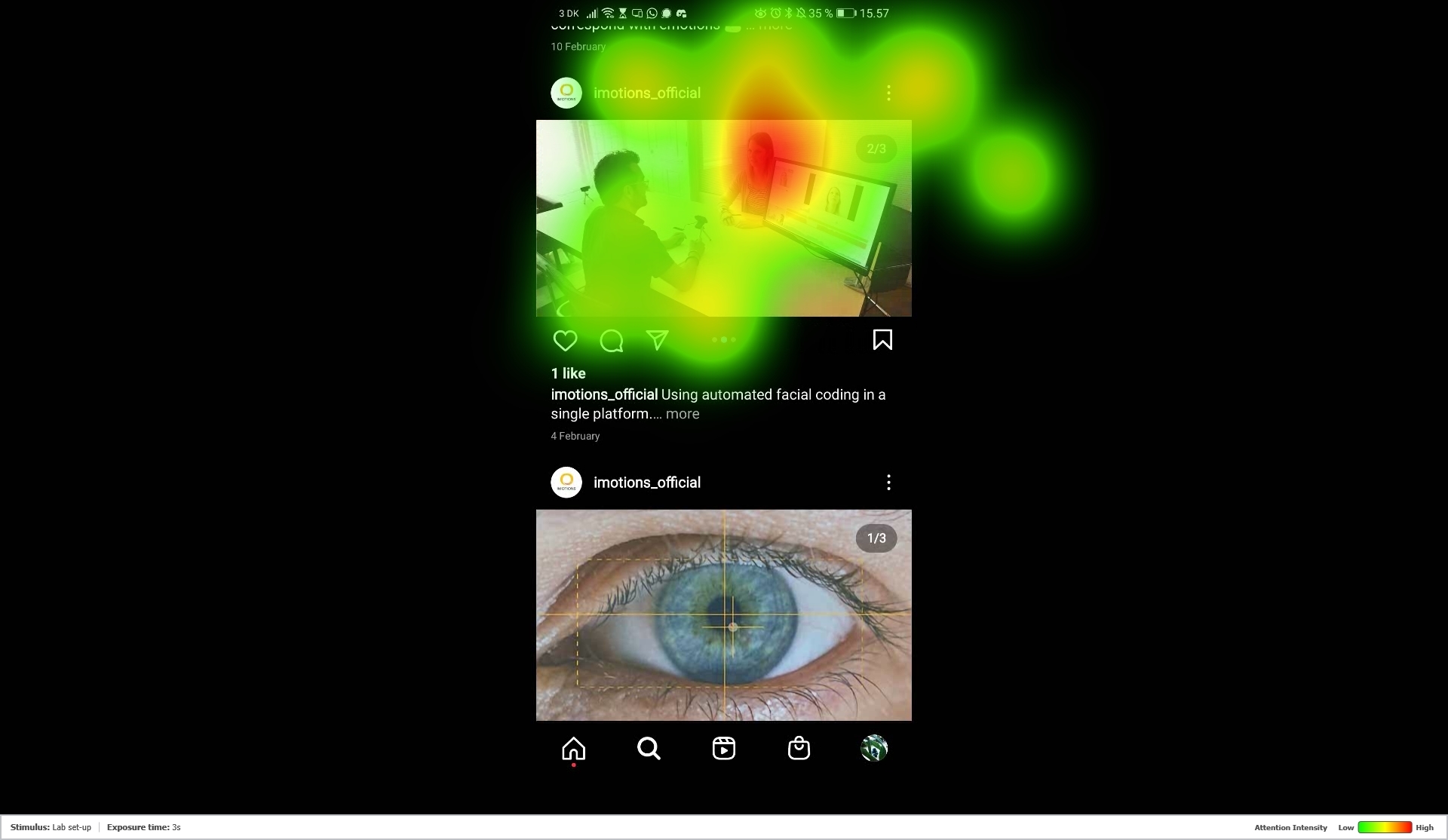

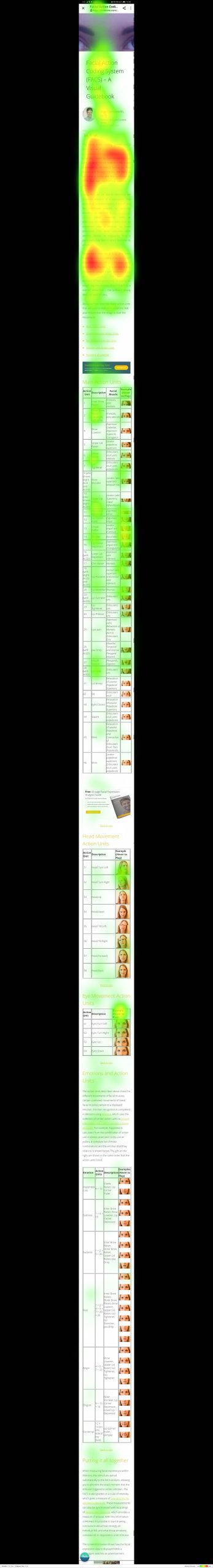

The first question we asked was: if we were only interested in one image among tons of scrollable content, could we isolate it and look at it? The answer is, of course, yes. In the image below, we see a heatmap on an image of a typical lab set-up with iMotions Desktop. This was one of many images on our Instagram account. By using iMotions’ Gaze Mapping tool, we were able to select the image we were interested in and use computer vision to map the moving gaze during scrolling onto this image. This enables us to aggregate the eye tracking data from multiple participants and generate the heatmap.
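As a rough illustration of the aggregation step only, the sketch below pools gaze samples from several participants into a grid of counts, which is the raw material for a heatmap. It assumes the gaze points have already been mapped onto the reference image; the function name, the cell size, and the sample data are all made up for this example and do not reflect how iMotions implements it.

```python
from collections import Counter


def heatmap_counts(gaze_by_participant, cell_px=50):
    """Count mapped gaze samples per grid cell, pooled over all participants.

    gaze_by_participant maps a participant ID to a list of (x, y) points
    in reference-image pixel coordinates.
    """
    counts = Counter()
    for samples in gaze_by_participant.values():
        for x, y in samples:
            counts[(int(x // cell_px), int(y // cell_px))] += 1
    return counts


# Hypothetical, already-mapped gaze samples for two participants.
mapped = {
    "P01": [(120, 80), (130, 90), (400, 300)],
    "P02": [(110, 60), (410, 310)],
}
grid = heatmap_counts(mapped)
for cell, n in grid.most_common():
    print(cell, n)
```

Cells with higher counts would be drawn in warmer colors; a production heatmap would typically also smooth the counts and weight them by fixation duration.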

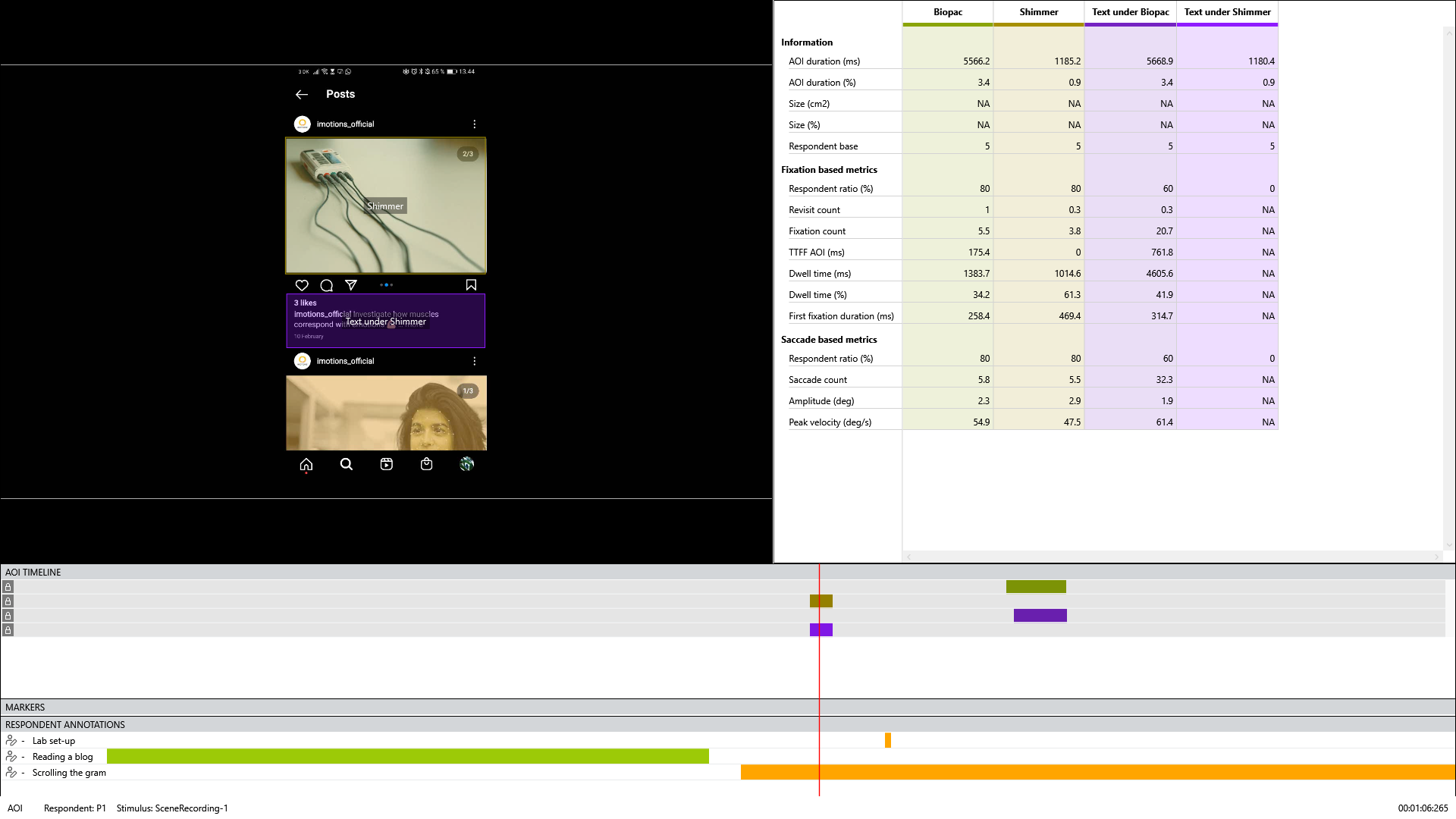

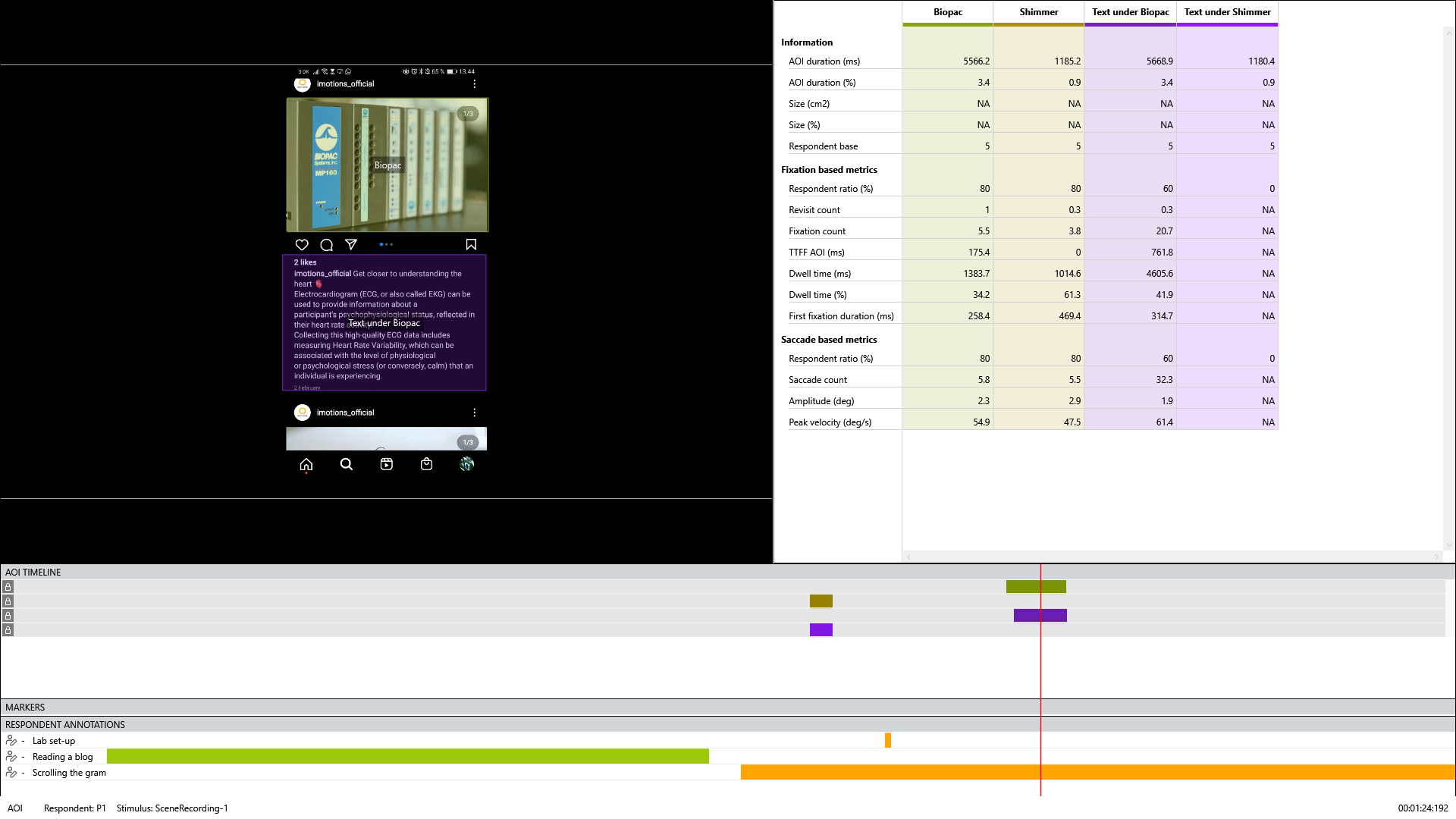

Next, we wanted to study scrolling behaviors and evaluate how difficult they would be to analyze. Endless scrolling can produce a never-ending stimulus, making aggregation and analysis difficult. To add to this complication, people scroll at different speeds, choose to pause at different points, and take different paths to accomplish a task. In other words, there are individual differences in what people attend to, what they ignore, what grabs attention, and what sustains it. A good way to zero in on specific elements to analyze is by using areas of interest (AOIs). In iMotions, you can have dynamic AOIs that you move on the screen during analysis to reflect the scrolling behavior of each of your participants.

As an example, we wanted to compare two of the sensors we integrate with for Galvanic Skin Response (GSR): the Biopac and the Shimmer. As can be seen in the AOI analysis, both sensors caught most of our participants’ attention, with 80% of our participants looking at both devices. However, while participants spent 34% of their time looking at the Biopac image, they spent almost 42% of their time reading the description of this mammoth piece of hardware. With the Shimmer, no one felt the need to read the text; instead, participants spent 61% of their time looking at the device. The picture of the Shimmer was definitely more photogenic than that of the Biopac, but participants were more likely to engage with the text under the Biopac picture than with the text under the Shimmer image.
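For readers who want to see what a "percent of time in AOI" figure boils down to, here is a minimal sketch. It is not the iMotions implementation: the `Box` type, the `dwell_share` function, and the sample data are hypothetical, and it assumes a constant sampling rate so that the share of samples equals the share of time.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """An AOI rectangle in screen pixels at one moment in time."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def dwell_share(samples) -> float:
    """Fraction of gaze samples that land inside the AOI.

    Each sample is (gaze_x, gaze_y, aoi_box). The box can differ from
    sample to sample because the AOI moves as the participant scrolls;
    it is None while the AOI is scrolled off-screen.
    """
    if not samples:
        return 0.0
    hits = sum(
        1 for x, y, box in samples if box is not None and box.contains(x, y)
    )
    return hits / len(samples)


# Hypothetical recording: the AOI drifts upward as the page scrolls.
samples = [
    (200, 500, Box(100, 400, 300, 600)),  # gaze inside the AOI
    (200, 450, Box(100, 300, 300, 500)),  # AOI moved up, gaze still inside
    (200, 450, Box(100, 100, 300, 300)),  # AOI now above the gaze point
    (200, 450, None),                     # AOI scrolled off-screen
]
print(f"{dwell_share(samples):.0%} of samples in AOI")
```

Because the AOI position is stored per sample, the same calculation works for every participant regardless of how fast or how far they scrolled.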

Another common question we get is how text and images interact with each other. For this, we chose our blog post on facial expression analysis, which has an entire sub-section on the various expressions measured by the algorithm. This section uses both text and images to illustrate what facial expressions can be measured. Let’s say we want to generate a heatmap to see whether participants focus more on the text or on the image guides. To do that, we have to find a way to aggregate across a very long scrolling stimulus. Such study designs are common for websites, manuals, instructions, and other usability studies. Again, Gaze Mapping helps us create a heatmap of the entire FEA guide. In this case, it accounts for the fact that our participants are scrolling and creates a heatmap on a reference image that is a screenshot of the entire document.

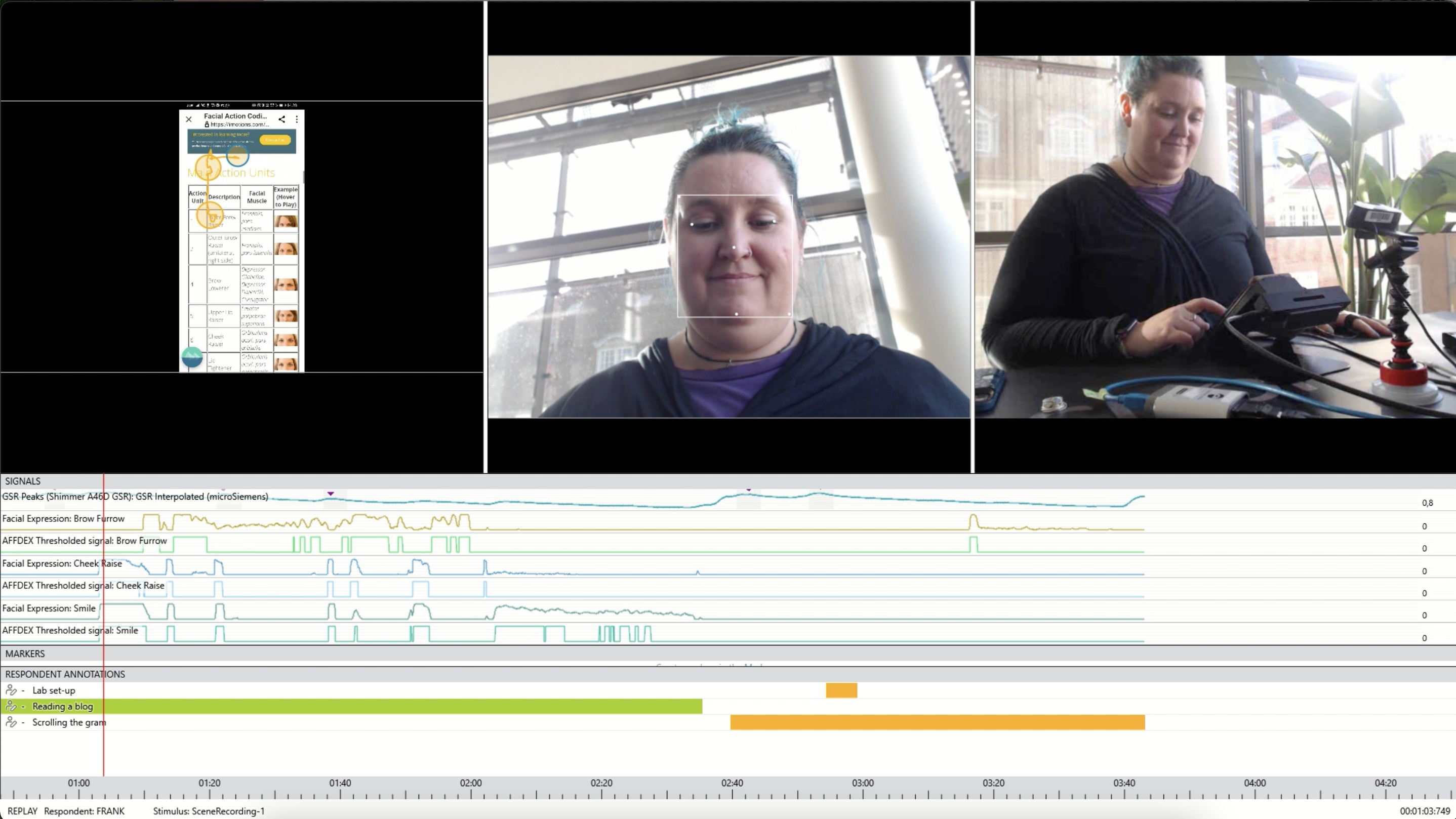
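To show what mapping gaze onto a full-page reference image means in coordinate terms, here is a deliberately simplified sketch. Gaze Mapping uses computer vision to work out where the visible screen sits on the reference image; this example swaps that out for a much simpler assumption, namely that the vertical scroll offset is already known for every gaze sample. All names and numbers are hypothetical.

```python
def to_page_coords(screen_x, screen_y, scroll_offset_px):
    """Convert a gaze point on the visible screen to full-page coordinates.

    scroll_offset_px is how far the page has been scrolled down, which is
    assumed to be known for each sample in this sketch.
    """
    return screen_x, screen_y + scroll_offset_px


def map_to_reference(samples, page_height_px):
    """Map (screen_x, screen_y, scroll_offset_px) samples onto the
    reference screenshot, dropping points that fall outside it."""
    mapped = []
    for x, y, scroll in samples:
        page_x, page_y = to_page_coords(x, y, scroll)
        if 0 <= page_y < page_height_px:
            mapped.append((page_x, page_y))
    return mapped


# Hypothetical samples: same screen position, different scroll depths.
samples = [
    (180, 300, 0),     # top of the page
    (180, 300, 1200),  # same spot on screen, further down the page
    (180, 700, 4000),  # beyond the end of a 4500 px screenshot
]
print(map_to_reference(samples, page_height_px=4500))
```

Once every sample is expressed in page coordinates, data from participants who scrolled differently can be pooled on one reference image.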

Of course, the best way to understand emotions and preferences beyond just what people were looking at is to go multimodal, which is what we did as well. A webcam for facial expression analysis can easily be mounted on a tripod behind the mobile stand, and participants can wear a Shimmer device on their non-swiping hand to measure GSR. Here you can see one of our participants trying to make the facial expressions they are reading about, alongside GSR peaks (highlighted in gray) that show their level of emotional arousal towards the text.

Across participants, multimodal research can give additional insights into how engaged people were with the content they were interacting with, what they were paying attention to, and the nature of that engagement in terms of valence and intensity.
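As a rough idea of what finding a GSR peak involves, the sketch below marks local maxima that rise a minimum amount above the preceding trough. This is a simple illustrative rule, not the peak detection used in iMotions; the function name, the threshold, and the signal values are all assumptions made for this example.

```python
def find_gsr_peaks(signal, min_rise=0.05):
    """Return indices of local maxima that rise at least min_rise
    (in the signal's own units, e.g. microsiemens) above the most
    recent local minimum."""
    peaks = []
    trough = signal[0] if signal else 0.0
    for i in range(1, len(signal) - 1):
        prev, cur, nxt = signal[i - 1], signal[i], signal[i + 1]
        if cur <= prev and cur <= nxt:
            # Local minimum: remember it as the baseline for the next peak.
            trough = cur
        elif cur > prev and cur >= nxt and cur - trough >= min_rise:
            # Local maximum that is high enough above the baseline.
            peaks.append(i)
    return peaks


# Hypothetical skin conductance trace with one clear response.
trace = [1.00, 1.01, 1.00, 1.02, 1.20, 1.35, 1.25, 1.10, 1.05, 1.06, 1.05]
print(find_gsr_peaks(trace))
```

The small bumps in the trace are ignored because they do not rise far enough above the baseline; only the larger response is reported.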

As you can see, a mobile stand allows researchers to test mobile content on an actual phone without giving up the data quality of a controlled desktop environment. Paired with iMotions’ eye tracking analytics, including Gaze Mapping and Dynamic AOIs, it opens up a wide range of studies on mobile devices.
