Software and hardware manufacturers are increasingly developing tools that let users conduct human behavior research with several modalities at once, for example by combining eye tracking with facial expression analysis or EEG. As a result, research becomes not only more convenient but also more efficient and precise.

As the market for biosensor research grows, “multimodal research” has become one of the most prevalent terms for describing research with two or more biosensors. The term can actually mean a few different things, and in this post we cover what it means in relation to our iMotions software.

In the past, researchers who wanted to collect data from several different biosensors at once had to use one lab station per biosensor, or modality. This “one sensor, one station” approach is affectionately nicknamed “The Frankenstein Lab.” Much like its cinematic namesake, it is alive (!) only through an unnecessary amount of effort and inconvenience, both during data collection and during the subsequent data analysis. Aligning timestamps and annotations, as well as processing the data, often has to be done manually, to the detriment of study accuracy and efficiency. Multimodal research software does away with most of these inconveniences, and in iMotions, we remove them almost completely.
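To make the manual alignment step more concrete: in a “one sensor, one station” setup, each station logs data on its own clock, so someone has to line the streams up afterwards. The Python sketch below is only a rough illustration of that chore, not a description of how iMotions handles it. It attaches to each eye-tracking timestamp the most recent GSR sample recorded at or before that moment. The function name, the toy timestamps and values, and the assumption that the clock offset between stations is already known (for example from a shared sync marker) are all hypothetical.

```python
from bisect import bisect_right


def align_nearest_preceding(base_times, other_times, other_values, offset=0):
    """For each timestamp in base_times, return the most recent value from the
    other stream at or before that time, or None if that stream has no sample yet.

    offset is a hypothetical, already-known clock offset of the other station
    relative to the base station (same units as the timestamps).
    other_times must be sorted in ascending order.
    """
    # Map the other station's timestamps onto the base station's clock.
    shifted = [t - offset for t in other_times]
    aligned = []
    for t in base_times:
        # Index of the first sample strictly after t; the one before it is the latest at or before t.
        i = bisect_right(shifted, t)
        aligned.append(other_values[i - 1] if i > 0 else None)
    return aligned


# Toy data in milliseconds: eye tracker at roughly 60 Hz, GSR sampled less often.
gaze_ts = [0, 16, 33, 50, 66]
gsr_ts = [5, 30, 55]
gsr_microsiemens = [1.20, 1.25, 1.40]

print(align_nearest_preceding(gaze_ts, gsr_ts, gsr_microsiemens))
```

Taking the latest preceding sample, rather than interpolating, avoids using values from the future. Doing this by hand for every stream, plus every stimulus annotation, is exactly the kind of effort that integrated multimodal software is meant to remove.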

With iMotions, multimodal research can be done with several biosensors, or modules, with each individual piece of hardware connected to a single computer or lab station. When we talk about multimodal research software here at iMotions, our definition is therefore: a software program that integrates several biosensors on one single computer through one dedicated piece of software.

What makes multimodality valuable in research?

Aside from the convenience of working in one dedicated software environment and the significant improvement in research efficiency, one other aspect makes multimodality invaluable: it is the only way to conduct truly in-depth, human-centered behavior research.

A number of customers choose to buy a full multimodal lab from the beginning of their research, while others start smaller. There can be many reasons for starting small with a single module, chief among them cost, but a single module will yield limited results compared with the nuances you can capture when working fully multimodally.

Let’s assume you want to use eye tracking to capture the visual attention of your respondents. That is easily done, but now you might want to know not only what they are looking at, but also what in the visual stimuli might cause an emotional reaction. For that you need facial expression analysis, which can tell you which emotional cues your respondents are displaying. So now you know what people are feeling when they look at the visual stimuli, but how intense are those feelings? To capture the intensity of the emotional response, you need to measure galvanic skin response (GSR). By measuring electrodermal activity, which changes with the activity of a respondent’s sweat glands, it is possible to gauge how intensely a respondent is reacting when exposed to a stimulus.
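The three questions in the example above (where are they looking, what emotion are they showing, how intense is the reaction) can be combined once the streams share a timeline. The Python sketch below is a hypothetical illustration, not an iMotions feature or export format: it assumes samples are already time-aligned and each carries an area of interest (AOI) from eye tracking, a facial expression “joy” score between 0 and 1, and a GSR value in microsiemens. All names and numbers are made up for the example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical, already time-aligned samples:
# (AOI being looked at, facial "joy" score 0-1, GSR in microsiemens)
samples = [
    ("logo", 0.10, 1.20),
    ("logo", 0.20, 1.22),
    ("product", 0.70, 1.60),
    ("product", 0.80, 1.75),
    ("price", 0.05, 1.30),
]


def summarize_by_aoi(rows):
    """Group aligned samples by AOI and average each modality per AOI."""
    groups = defaultdict(list)
    for aoi, joy, gsr in rows:
        groups[aoi].append((joy, gsr))
    return {
        aoi: {
            "mean_joy": mean(j for j, _ in vals),  # what emotion is displayed
            "mean_gsr": mean(g for _, g in vals),  # how strong the reaction is
        }
        for aoi, vals in groups.items()
    }


for aoi, stats in summarize_by_aoi(samples).items():
    print(f"{aoi}: joy={stats['mean_joy']:.2f}, gsr={stats['mean_gsr']:.2f} uS")
```

Eye tracking alone would only give the AOI column. Each added modality adds a column that answers a different question about the same moment.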

In short, the more modalities you apply to your research, the deeper your understanding of your respondents will be.

By combining several biosensors to address one research question, you collect data that is more nuanced, more informative, and ultimately more valuable.

Multimodal Biosensor Research in iMotions

The industry trend these days points towards multimodal research becoming the standard. At iMotions, multimodality is deeply embedded in the company’s DNA. Since the early days of the company, multimodal research has not been a buzzword but a foundational part of the philosophy with which we develop software for the researchers of today and tomorrow. This approach has allowed us to be at the forefront of multimodal biosensor integration and multi-layered data analysis for years before the industry trend began to point in that direction.

In the iMotions Software Platform, the multimodal setup is standard through our base module, CORE. It works seamlessly with all the modalities we offer and has been developed to communicate with one, two, or all biosensor inputs, regardless of your research setup.

“As part of my Ph.D. thesis on behavioral changes in patients with dementia, the iMotions platform enabled us to integrate eye tracking, galvanic skin response, and automated facial expression analysis simultaneously, providing us with unique and comprehensive biometric data from the same time-point for each patient. Furthermore, it provided us with an easily accessible setup for clinical testing in multiple locations in our memory clinic, enhancing feasibility of broad inclusion across different patient groups. In addition, the help of our success manager at iMotions provided us with the appropriate support for a systematic and theory-driven analysis of the data. Altogether, iMotions has provided us with unique data, as well as practical and theoretical solutions in working towards our research aims.”

– Ellen Singleton, Ph.D. student at the Alzheimer Center Amsterdam

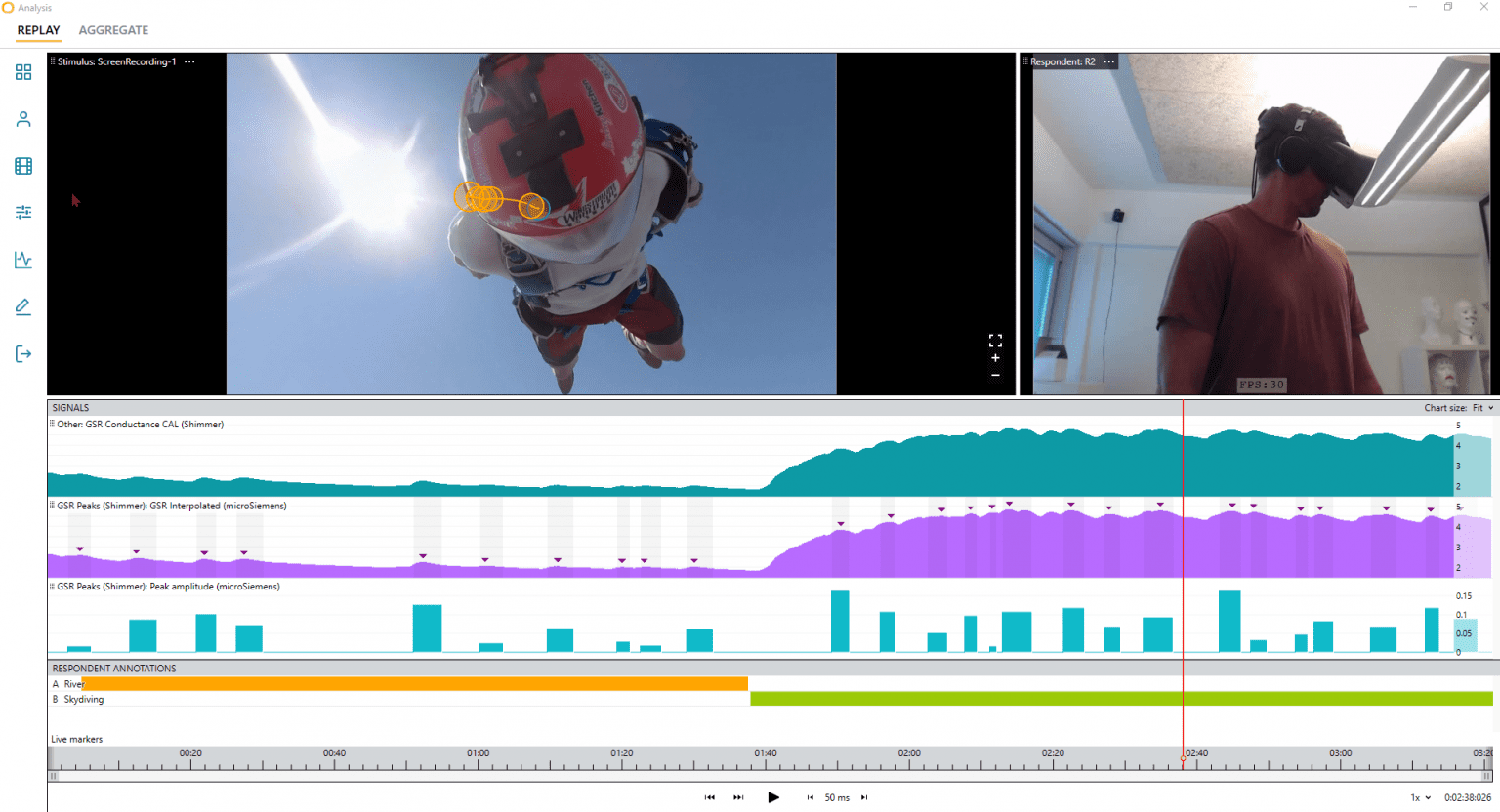

The software has been designed specifically to do the heavy lifting for scientists and researchers, taking over the labor-intensive work that unimodal research setups require, which mostly consists of large amounts of manual processing. That is because, by and large, the philosophy behind multimodal research software is one of convenience and efficiency. Integrating several pieces of hardware at once could bring the risk of a muddled user interface and a confusing workflow, so a high number of data sources calls for an intuitive user interface. In the iMotions software suite, we have created visualization and analysis tools that make data collection and analysis easier. The improved ease of use starts early in the workflow, with an easily interpretable, real-time representation of your incoming data, as seen in the image above.

In the photo above, two modalities, VR eye tracking and GSR, are being used to capture the moment a skydiver takes the plunge out of a plane. As the photo shows, the stimulus presentation, multimodal data input, and real-time data visualization make this study setup decipherable to trained human behavior researchers and laypeople alike. Ease of use is always desirable regardless of skill level, but we also see it as an ideal way for customers who want to conduct multimodal research but have limited experience to get started, and eventually to scale up without the UX becoming more complex. Research complexity should come from the study setup or its scale, not from the tools used.

The need for manual coding during signal processing and subsequent data analysis has been all but eliminated through our custom-scripted R Notebooks. They provide fully transparent documentation of the algorithms used for signal processing, produce visible metrics for all our available modalities directly in the UI, and even allow advanced researchers to edit the code in R. If you want to dive deeper into how R Notebooks improve data processing and make multimodal data analysis a seamless experience, you can read more here.

Multimodal and Multi-Platform Human Behavior Research

Over the last year, we have launched two new data collection tools: the Online Data Collection and Mobile Data Collection platforms. As the names suggest, with these two tools data collection happens outside of the lab, either in respondents’ homes or out in the world with a respondent on the go. As with the lab-based setup, both tools work multimodally. Online Data Collection relies on the respondent’s webcam to conduct eye tracking and facial expression analysis, whereas Mobile Data Collection relies on wearables such as smartwatches and fitness trackers to measure metrics like heart rate. Once collected, the data is imported into the iMotions software and treated like any other multimodal dataset, because that is exactly what it is.