Virtual reality makes it possible to experience worlds that do not, or cannot, exist. This capability substantially widens the range of experimental settings available to researchers. Testing scenarios no longer need to be bound by the factors that would normally prevent certain experiments from taking place: time, safety, budget, or even the laws of physics. In principle, almost anything can be simulated in VR.

As the possibilities for testing have grown, measurement technology has had to keep up. If you want to test the attention of, for example, pilots while they experience a new flight simulation, you’ll need information about where they’re looking. This is where eye tracking in VR comes in. Below, we introduce eye tracking in VR, explain how it differs from eye tracking in the real world, and walk through how it can even improve the virtual experience itself.

How does VR eye tracking work?

Eye tracking typically works by continuously measuring the distance between the pupil center and the corneal reflection (the glint of light reflected off the cornea); this distance changes with the angle of the eye. An infrared light, invisible to the human eye, creates the reflection while cameras record and track the eye’s movements. Computer vision algorithms then deduce from the angle of the eyes where the gaze is directed.

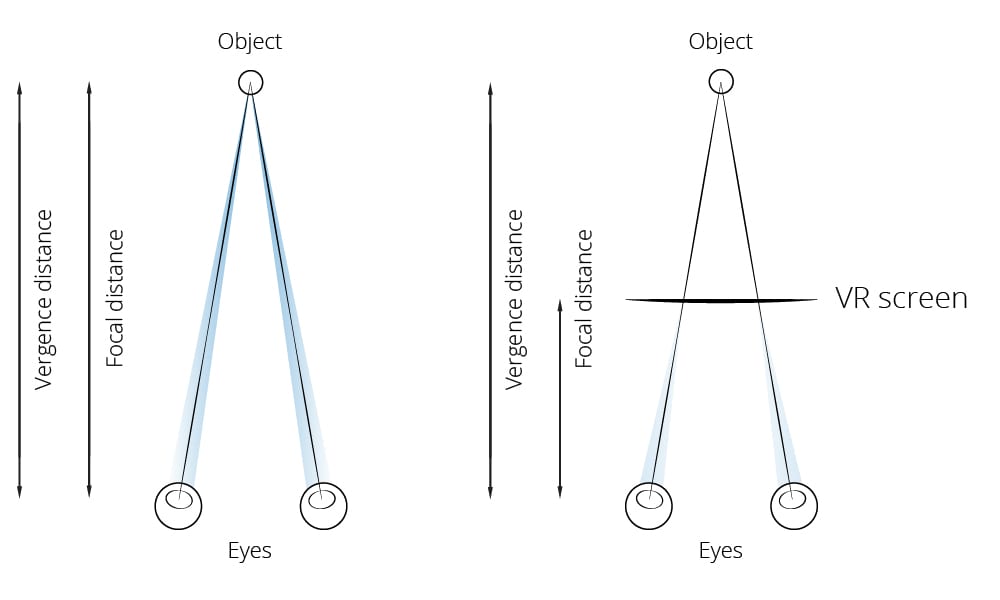
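The pupil-to-reflection measurement described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular eye tracker is implemented: it assumes the pupil and reflection centers have already been located in the camera image, and that a simple per-axis linear fit from a short calibration is good enough. The function names and calibration numbers are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class AxisCalibration:
    slope: float      # degrees of gaze per pixel of pupil-reflection offset
    intercept: float  # gaze angle in degrees at zero offset


def pupil_glint_offset(pupil_center, glint_center):
    """Offset (pixels) from the corneal reflection to the pupil center."""
    return (pupil_center[0] - glint_center[0],
            pupil_center[1] - glint_center[1])


def fit_axis(offsets, angles):
    """Least-squares line mapping one offset component to a gaze angle."""
    n = len(offsets)
    mean_x = sum(offsets) / n
    mean_y = sum(angles) / n
    sxx = sum((x - mean_x) ** 2 for x in offsets)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(offsets, angles))
    slope = sxy / sxx
    return AxisCalibration(slope, mean_y - slope * mean_x)


def estimate_gaze(offset, cal_x, cal_y):
    """Horizontal and vertical gaze angles (degrees) for one camera frame."""
    return (cal_x.slope * offset[0] + cal_x.intercept,
            cal_y.slope * offset[1] + cal_y.intercept)


# Hypothetical calibration: the user fixates targets at known angles
# while the offset is recorded for each target.
cal_x = fit_axis([-10.0, 0.0, 10.0], [-20.0, 0.0, 20.0])
cal_y = fit_axis([-5.0, 0.0, 5.0], [-10.0, 0.0, 10.0])

offset = pupil_glint_offset(pupil_center=(325.0, 242.5),
                            glint_center=(320.0, 240.0))
print(estimate_gaze(offset, cal_x, cal_y))  # (10.0, 5.0)
```

Real systems generally use richer models than a straight line per axis, but the idea is the same: a calibration step links the measured offset to known gaze angles.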

The principle is the same in VR, with one crucial difference: the eyes don’t necessarily point to where the person is looking. In the real world, the eyes display what is called “vergence”, in which both eyes are angled towards a single point where their lines of gaze meet (see the figure below).

In an everyday setting, if a line were drawn from the center of each eye, both lines would meet at the same point: the object that the person is looking at. In VR, the display is placed so close to the eyes that they don’t necessarily display vergence, although there is of course still a perception of depth due to the 3D information presented. VR eye tracking must therefore contend with incomplete gaze information [1].
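For the everyday case, where the two lines of gaze meet at the object being looked at, the fixation point can be estimated as the place where the two lines come closest together. The Python sketch below shows that geometry only; the function name, the coordinate system, and the 6 cm eye separation are assumptions chosen for illustration.

```python
def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def vergence_point(left_origin, left_dir, right_origin, right_dir, eps=1e-12):
    """Midpoint of the shortest segment joining two gaze lines.

    Returns None when the lines are (nearly) parallel, i.e. when the
    eyes show no usable vergence. The lines are treated as infinite.
    """
    w0 = tuple(l - r for l, r in zip(left_origin, right_origin))
    a = _dot(left_dir, left_dir)
    b = _dot(left_dir, right_dir)
    c = _dot(right_dir, right_dir)
    d = _dot(left_dir, w0)
    e = _dot(right_dir, w0)
    denom = a * c - b * b          # zero when the directions are parallel
    if denom < eps:
        return None
    t = (b * e - c * d) / denom    # position along the left gaze line
    s = (a * e - b * d) / denom    # position along the right gaze line
    p_left = tuple(o + t * v for o, v in zip(left_origin, left_dir))
    p_right = tuple(o + s * v for o, v in zip(right_origin, right_dir))
    return tuple((p + q) / 2 for p, q in zip(p_left, p_right))


# Eyes 6 cm apart (assumed), both aimed at a point 1 m straight ahead.
point = vergence_point((-0.03, 0.0, 0.0), (0.03, 0.0, 1.0),
                       (0.03, 0.0, 0.0), (-0.03, 0.0, 1.0))
print(point)
```

When the eyes in a headset do not converge reliably, this estimate on its own is not enough, which is why additional information from the virtual scene is needed.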

Fortunately, while the position of the eyes doesn’t tell the whole story, the missing data is available. By combining the direction of the eyes with information about the depth of the virtual objects in the VR environment, it’s possible to construct a model of what was looked at: a virtual line can be traced from the direction of the eyes into the virtual world.

Not all VR environments necessarily have this information, which precludes accurate tracking in those scenarios, but for those that do, eye tracking can be carried out.
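Tracing a virtual line from the eyes into the scene is essentially a ray cast. The Python sketch below assumes, purely for illustration, that each virtual object can be approximated by a sphere with a known position; the `Sphere` type, the object names, and the scene are hypothetical, and a real VR engine would use its own scene geometry and ray-casting routines.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    name: str
    center: tuple
    radius: float


def ray_sphere_distance(origin, unit_dir, sphere):
    """Distance along a unit-length ray to the sphere surface, or None on a miss.

    Simplification: a ray that starts inside the sphere counts as a miss.
    """
    oc = tuple(o - c for o, c in zip(origin, sphere.center))
    b = sum(d * v for d, v in zip(unit_dir, oc))
    c = sum(v * v for v in oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t >= 0 else None


def gazed_object(origin, direction, scene):
    """Name of the nearest object hit by the gaze ray, or None."""
    length = math.sqrt(sum(d * d for d in direction))
    unit = tuple(d / length for d in direction)
    best_name, best_t = None, math.inf
    for sphere in scene:
        t = ray_sphere_distance(origin, unit, sphere)
        if t is not None and t < best_t:
            best_name, best_t = sphere.name, t
    return best_name


scene = [
    Sphere("poster", (0.0, 0.0, 5.0), 1.0),   # further away, behind the button
    Sphere("button", (0.0, 0.0, 2.0), 0.5),   # closest object straight ahead
    Sphere("lamp", (3.0, 0.0, 2.0), 0.5),     # off to the side
]
print(gazed_object((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene))  # button
```

Because the nearest hit wins, the object in front is reported even though the gaze line also passes through the one behind it. This is the step that depends on the scene providing depth information.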

The benefits of eye tracking in VR

Rendering complete virtual environments is computationally expensive, so there is a strong incentive to reduce this burden and free up processing power for other uses (e.g. ensuring a smooth experience, expanding functionality, or improving graphical appearance).

By using information from eye tracking in VR, it’s possible to carry out what is known as “foveated rendering”, in which only those elements of the environment that are looked at are rendered in full detail, while the periphery is rendered at reduced quality. This can reduce the processing power required, and also create a more immersive environment, in which the virtual world more closely represents the real world.

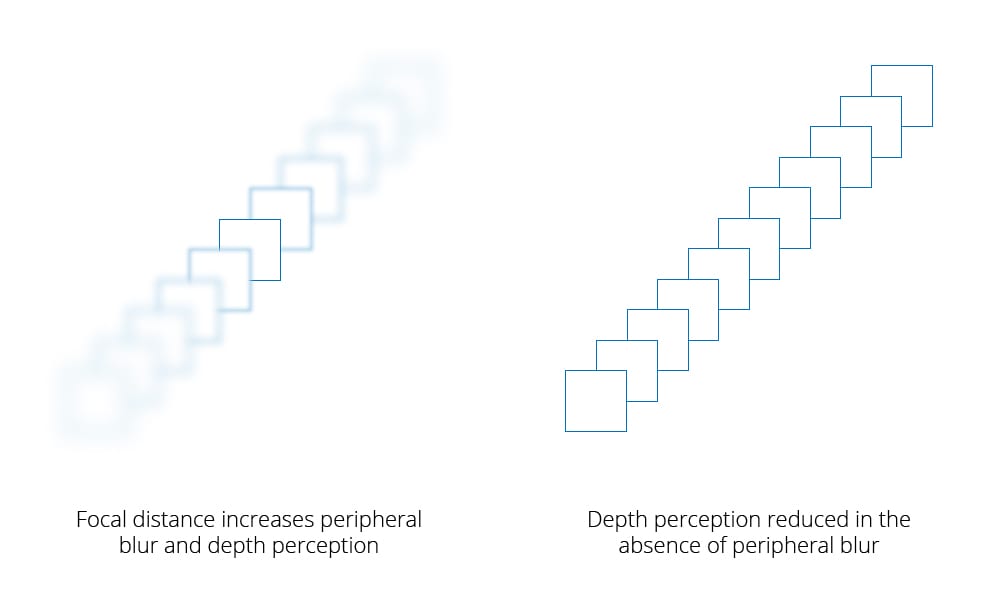
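The core decision in foveated rendering, how much detail to spend on each part of the image given where the user is looking, can be sketched as follows. The Python below is only an illustration: the angular thresholds (5 and 20 degrees) and the three quality levels are assumed values, not figures from any specific headset or rendering engine.

```python
import math


def eccentricity_deg(gaze_dir, tile_dir):
    """Angle in degrees between the gaze direction and the direction to a screen tile."""
    dot = sum(g * t for g, t in zip(gaze_dir, tile_dir))
    norm = (math.sqrt(sum(g * g for g in gaze_dir))
            * math.sqrt(sum(t * t for t in tile_dir)))
    cosine = max(-1.0, min(1.0, dot / norm))  # guard against rounding error
    return math.degrees(math.acos(cosine))


def resolution_scale(ecc_deg, fovea_deg=5.0, mid_deg=20.0):
    """Per-axis resolution scale for a tile (thresholds are assumptions)."""
    if ecc_deg <= fovea_deg:
        return 1.0    # full detail where the user is looking
    if ecc_deg <= mid_deg:
        return 0.5    # half resolution in the near periphery
    return 0.25       # quarter resolution in the far periphery


def shaded_pixel_fraction(scales):
    """Average share of pixels shaded, relative to rendering every tile at full detail."""
    return sum(s * s for s in scales) / len(scales)


gaze = (0.0, 0.0, 1.0)
tiles = [
    (0.0, 0.0, 1.0),                               # directly at the gaze point
    (math.tan(math.radians(10.0)), 0.0, 1.0),      # 10 degrees off to the side
    (1.0, 0.0, 1.0),                               # 45 degrees off to the side
]
scales = [resolution_scale(eccentricity_deg(gaze, t)) for t in tiles]
print(scales)                          # [1.0, 0.5, 0.25]
print(shaded_pixel_fraction(scales))   # 0.4375
```

In this toy example fewer than half of the pixels are shaded compared with rendering every tile at full detail, which illustrates where the saving in processing power comes from.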

Foveated rendering echoes our real-world experience in two ways: in style, as our peripheral vision is largely blurred, and by creating a more realistic sense of depth. Researchers have previously pointed out that a lack of focus blur can lead to a “different perception of size and distance of objects in the virtual environment” [1, 2]. Introducing peripheral blur increases the sense of depth. In natural vision, focus blur arises from a process called “accommodation”, wherein the lens of the eye adjusts its focus to the distance of the viewed object, leaving objects at other distances out of focus.

Foveated rendering can also improve the ecological validity of the experience (that is, how well an experiment mimics reality). When environments are closer to real life, the behavior of participants within VR can also be expected to be closer to real life. Researchers can then be increasingly confident that the results of the experiment are applicable beyond the virtual world.

This ultimately means that attentional processes can be both measured and trusted to be more true to life as a result of eye tracking. This opens up possibilities for accurately understanding human behavior in settings that would otherwise be too costly or impractical to expose participants to in real life.

VR Eye Tracking Research

An example of this is research that used iMotions to compare different methods of instruction for working in a wet lab [3]. Participants were trained either on a desktop PC or within a virtual environment. A wet lab is often too costly an environment to place students in, yet in simulation it was possible to test in a cost-effective manner how participants responded to it. The researchers found that participants in the VR setting experienced greater immersion, yet learned less, than those using the screen-based version.

Another example using iMotions involved participants driving a virtual car while following an autonomously controlled virtual car [4]. The researchers were able to expose the participants to what would have been unsafe environments had the experiment been carried out in the real world, without any risk of danger. They found that the increased comfort of the participants in relation to the autonomous car also increased the risk of a collision, a critical factor for maintaining driver safety in the presence of self-driving cars.

Further research with iMotions in VR has explored disease diagnosis on a virtual island [5], the experience of (virtual) social interaction combined with haptic feedback [6], and the effect of architectural designs on feelings of rejuvenation, without the cost of construction [7], among other topics. For the future of research in VR, the possibilities are virtually limitless.

References

[1] Clay, V., König, P., & König, S. (2019). Eye Tracking in Virtual Reality. Journal of Eye Movement Research, 12(1):3.

[2] Eggleston, R., Janson, W. P., & Aldrich, K. A. (1996). Virtual reality system effects on size-distance judgements in a virtual environment. Virtual Reality Annual International Symposium, 139–146. https://doi.org/10.1109/VRAIS.1996.490521

[3] Makransky, G., Terkildsen, T. S., & Mayer, R. E. (2017). Adding immersive virtual reality to a science lab simulation causes more presence but less learning. Learning and Instruction. https://doi.org/10.1016/j.learninstruc.2017.12.007

[4] Brown, B., Park, D., Sheehan, B., Shikoff, S., Solomon, J., Yang, J., & Kim, I. (2018). Assessment of human driver safety at Dilemma Zones with automated vehicles through a virtual reality environment. Systems and Information Engineering Design Symposium (SIEDS), 185–190.

[5] Taub, M., Sawyer, R., Lester, J., & Azevedo, R. (2019). The Impact of Contextualized Emotions on Self-Regulated Learning and Scientific Reasoning during Learning with a Game-Based Learning Environment. International Journal of Artificial Intelligence in Education. https://doi.org/10.1007/s40593-019-00191-1

[6] Krogmeier, C., Mousas, C., & Whittinghill, D. (2019). Human, Virtual Human, Bump! A Preliminary Study on Haptic Feedback. IEEE Conference on Virtual Reality and 3D User Interfaces (VR). https://doi.org/10.1109/VR.2019.8798139

[7] Zou, Z., & Ergan, S. (2019). A Framework towards Quantifying Human Restorativeness in Virtual Built Environments. Environmental Design Research Association (EDRA).